Today I attended csv,conf in Berlin, which turned out to be an excellent conference full of people who gather and transform data on a daily basis.

CSV, the comma-separated values file format, seems like a joke at first: who seriously uses it today, in the age of SQL, NoSQL and other $random-DB solutions? It turns out that almost everybody does at some point, either as input or as a data interchange format when the systems involved are not part of your organisation.

Fail quickly and cheaply

A few different people presented their solutions for “testing” CSV files, which might be better described as making sure they conform to a certain schema. The tools range from simple checks to a full-fledged DSL that lets you specify rules and even verify checksums of referenced files.

What I liked most about this approach is that it lets you very quickly verify the sanity of files you receive and give quick feedback to the party sending them. This ensures that you don’t have to deal with bad data later, whether later in time or further down your pipeline.
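To make the idea concrete, here is a minimal sketch of a “simple check” in Python. It is not any of the tools presented at the conference; the column names and rules in `SCHEMA` are made up for illustration, and a real schema language would be far richer.

```python
import csv
import io


def is_float(value):
    """Return True if the string parses as a float."""
    try:
        float(value)
        return True
    except ValueError:
        return False


# Hypothetical schema: column name -> function returning True for a valid value.
SCHEMA = {
    "id": lambda v: v.isdigit(),
    "email": lambda v: "@" in v,
    "amount": is_float,
}


def validate(handle, schema=SCHEMA):
    """Return a list of human-readable problems found in a CSV file object."""
    reader = csv.DictReader(handle)
    header = reader.fieldnames or []

    # Fail immediately if the header lacks a required column.
    missing = [name for name in schema if name not in header]
    if missing:
        return [f"header: missing columns {missing}"]

    problems = []
    for row in reader:
        for name, check in schema.items():
            value = row[name]
            # DictReader fills missing trailing fields with None.
            if value is None or not check(value):
                problems.append(
                    f"line {reader.line_num}: bad {name!r} value {value!r}"
                )
    return problems


# Usage: validate an in-memory file; a real run would open the received file.
sample = io.StringIO("id,email,amount\n1,a@example.com,9.50\nx,nope,abc\n")
for problem in validate(sample):
    print(problem)
```

Running something like this the moment a file arrives means the list of problems can go straight back to the sender, before the data gets anywhere near the rest of the pipeline.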

Embrace the UNIX philosophy – do one thing at a time

Most of the speakers also mentioned that, in order to keep your sanity, you should build your system as a collection of small dedicated tools that pipe into other dedicated tools. It doesn’t necessarily have to be a Unix pipe; it can be a series of steps, each of which converts the data and hands it to the next step or to a database, where the next step picks it up and processes it again.
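The same idea can be sketched inside a single Python program, with generators standing in for pipes. This is only an illustration: the step names and the `amount` column are hypothetical, and in practice each step could just as well be a separate script or write to a database.

```python
import csv
import io


def read_rows(handle):
    """Step 1: parse CSV text into dictionaries, one per row."""
    yield from csv.DictReader(handle)


def clean(rows):
    """Step 2: strip stray whitespace from every value."""
    for row in rows:
        yield {key: (value or "").strip() for key, value in row.items()}


def keep_valid(rows):
    """Step 3: drop rows with an empty amount (a made-up rule)."""
    for row in rows:
        if row["amount"]:
            yield row


def total(rows):
    """Step 4: the actual processing, here a simple sum."""
    return sum(float(row["amount"]) for row in rows)


def run(handle):
    """Chain the steps; each one only knows about its own job."""
    return total(keep_valid(clean(read_rows(handle))))


# Usage with an in-memory file.
data = io.StringIO("name,amount\nalice, 10 \nbob,\ncarol,2.5\n")
print(run(data))
```

Because each step takes rows in and hands rows out, any one of them can be tested, replaced or reordered without touching the others.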

Everybody has the same problems

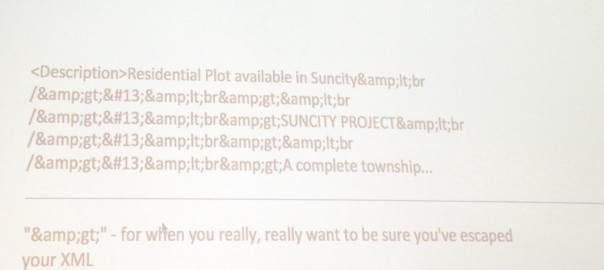

I think the biggest takeaway for me was that we’re all having the same issues. We all get messy datasets that are hard to parse and are full of strange errors and inconsistencies.

As with other things, there is no silver bullet. We’ll have to build and teach best practices around data: testing, cleaning, and what works and what doesn’t, just as we’re doing for modern software development.

Interesting tools and libraries

- https://github.com/crowdata/crowdata – Easily crowdsource the analysis of your documents

- https://github.com/scraperwiki/xypath – Navigating around a grid of cells like XPath for spreadsheets

- https://github.com/digital-preservation/csv-validator – CSV Validation Tool and API (CSV Schema RI)

- http://lunrjs.com/ – Simple full-text search in your browser